FaaS on Kubernetes: From AWS Lambda & API Gateway To Knative & Kong API Gateway

Serverless functions are modular pieces of code that respond to a variety of events. They’re a fast and efficient way to run single-purpose services/functions. Although you can run “fat-functions” within them, I prefer single-responsibility functions that can be grouped under one endpoint using an API Gateway. Developers benefit from this paradigm by focusing on code and shipping a set of functions that are triggered in response to certain events. No server management is required and you can benefit from automated scaling, elastic load balancing, and the “pay-as-you-go” computing model.

Deploying a single function usually completes in less than a minute. This is a huge improvement in development and delivery speed, compared to most other workflows/patterns.

Kubernetes, on the other hand, provides a set of primitives to run resilient distributed applications using modern container technology. Using Kubernetes requires some infrastructure management overhead and it may seem like a conflict putting serverless and Kubernetes in the same box.

Hear me out. I come at this with a different perspective that may not be evident at the moment.

Serverless is based on the following tenets:

- no server management

- pay-for-use services

- automatic scaling

- built-in fault tolerance

You get auto-scaling and fault tolerance in Kubernetes, and using Knative makes this even simpler. While you take on some level of infrastructure management, you are not tied to any particular vendor’s serverless runtime, nor constrained by limits on the size of the application artefact.

Serverless Function With Knative

There’s more to say about Knative than I can cover in two sentences. Go to knative.dev to learn more. This post shows people who are familiar with AWS Lambda and API Gateway how to build and deploy serverless functions with Knative, then expose them via a single API.

This will be based on a Knative installation with Kong Ingress as the networking layer. Go to this [URL] (link no longer available) for steps on how to install and use Kong with Knative.

Pre-requisite

I’m going to walk you through building a simple URL shortening service in Node.js. You will need Knative and Kong set up on your Kubernetes cluster, and the following tools if you want to code along.

- Pack CLI

- Docker or a similar tool, e.g. Podman

- Node.js (version 16.10 or above) and npm

Project Set-Up

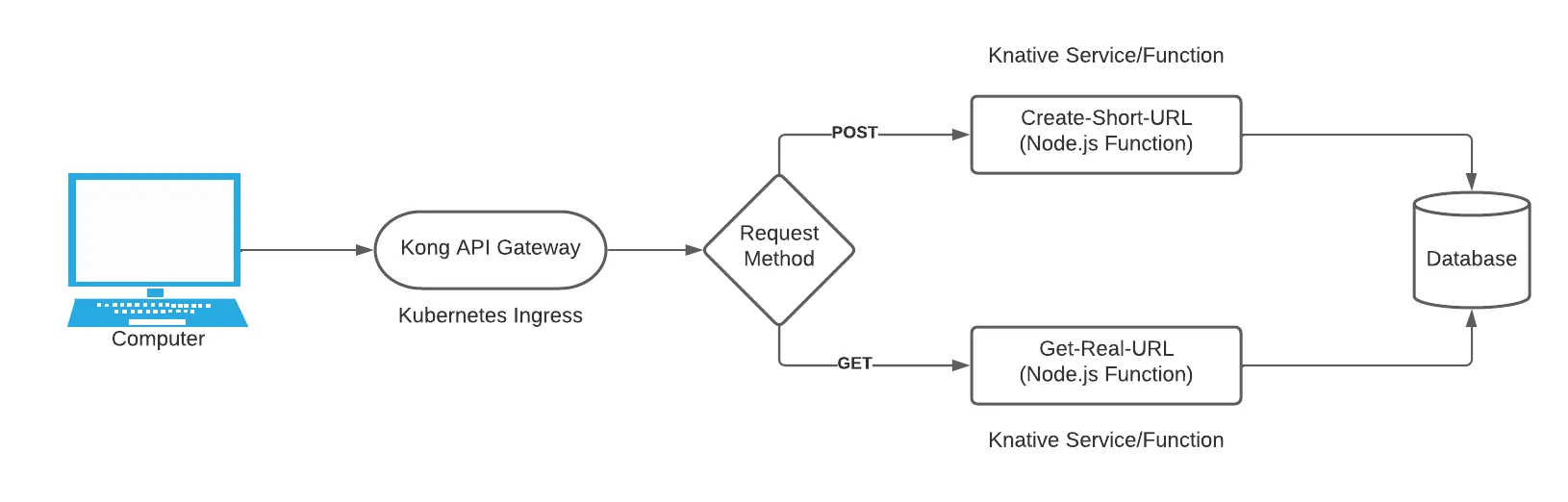

We’re going to create a monorepo with two functions, one to generate a shortened URL and another to process a shortened URL and redirect the user. Using a monorepo makes it easy to manage a group of functions that you want to expose via a single API endpoint.

The diagram above depicts how the request would flow from the user to the Kong Ingress controller. The Ingress controller will route the traffic to the right service based on the HTTP method.

We’re going to use Nx to manage the monorepo. Run the command npm install -g nx to install the Nx CLI globally. Now create the monorepo workspace by running the command below:

npx create-nx-workspace@latest tinyurl --preset=core --nx-cloud=false --packageManager=npm

A workspace named tinyurl is created with the following file structure:

packages/
nx.json
workspace.json
tsconfig.base.json
package.json

We’re going to make a few changes to the files. First, delete the workspace.json file and the packages/ directory. Open package.json and update the workspaces key to the value below:

"workspaces": [
  "functions/**"
]

These changes cause Nx to treat the workspace as a regular npm workspace, and you can invoke the scripts in each project’s package.json using Nx.

The generate-tinyurl function

We’re going to use kazi to generate and deploy the functions. Kazi is a CLI that helps you to build and deploy serverless functions to Knative. You can create functions and deploy them using the CLI. It’s still a pretty new tool with a few handy commands to create, deploy and retrieve functions deployed on Knative.

The function runtime is based on a fast and lightweight HTTP library called micro. To use kazi, you first have to install it via npm. Run the command npm i -g @kazi-faas/cli to install it.

The first function we’re going to create will be triggered by a POST request. It’ll get the URL to shorten from the request body, generate a unique code for it, save the data to a DB, then return the shortened URL in the response.

Open your terminal and browse to your workspace directory. Then run the command kazi create functions/generate-tinyurl --registry=YOUR_REGISTRY_NAMESPACE --workspace-install to scaffold the project. Replace YOUR_REGISTRY_NAMESPACE with your container registry endpoint. For example, docker.io/jenny. This command will create a new Node project with the following file structure:

config.json
index.js
package.json
README.md

The config.json file stores the configuration for building the source code and deploying it to Knative. At the moment it has just two values, name and registry. The name value is used by kazi as the image name and the Knative Service name. The registry value is the container registry to publish the image to.

The index.js file contains the function that handles incoming requests. Open index.js and add the following require statements:

const { json, send } = require("micro");
const { isWebUri } = require("valid-url");
const { nanoid } = require("nanoid");
const { db, q } = require("./db");

The db module is used to interact with a Fauna database. We will get to that in a moment. For now, open your terminal and navigate to your workspace directory. Install the required packages using the command below.

npm i valid-url nanoid faunadb -w generate-tinyurl

Go back to index.js and update the function with the code below.

module.exports = async (req, res) => {
  // Parse the JSON request body and pull out the URL to shorten.
  const { url } = await json(req);

  if (!isWebUri(url)) {
    // The input is malformed, so respond with 400 Bad Request.
    send(res, 400, "Invalid URL");
  } else {
    // Generate a 10-character identifier and store it with the URL.
    const code = nanoid(10);
    await db.query(
      q.Create(q.Collection("tinyurls"), {
        data: { url, code },
      }),
    );

    return { shortUrl: `${process.env.BASE_URL}/${code}`, originalUrl: url };
  }
};

The code above retrieves the URL from the request body, saves the data to the database, and returns a response to the user.

The json() function parses the request body to retrieve the url. The code then checks whether the URL is valid and returns a 400 (Bad Request) status if it isn’t. If the URL is valid, a unique string is generated. This string is used as the identifier for the URL.

The code and url are saved to the database, and the shortened URL is returned in the response.
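To make the flow easier to try without any dependencies, here is a minimal sketch of the same steps using only Node.js built-ins. It is an illustration rather than the function deployed in this post: `isHttpUrl` stands in for valid-url’s `isWebUri`, `makeCode` stands in for `nanoid(10)`, and a `Map` replaces the Fauna collection. All three names are hypothetical.

```javascript
const { randomBytes } = require("node:crypto");

// Stand-in for isWebUri: accept only absolute http(s) URLs.
function isHttpUrl(value) {
  try {
    const { protocol } = new URL(value);
    return protocol === "http:" || protocol === "https:";
  } catch {
    return false; // new URL() throws on anything it cannot parse
  }
}

// Stand-in for nanoid(10): 10 URL-safe characters derived from random bytes.
function makeCode(length = 10) {
  return randomBytes(length).toString("base64url").slice(0, length);
}

// Same flow as the handler, with a Map in place of the database.
// Returns null for an invalid URL instead of sending an HTTP response.
function shorten(url, baseUrl, store) {
  if (!isHttpUrl(url)) return null;
  const code = makeCode();
  store.set(code, url);
  return { shortUrl: `${baseUrl}/${code}`, originalUrl: url };
}

const memoryStore = new Map();
console.log(shorten("https://pmbanugo.me", "tinyurl.localhost", memoryStore));
console.log(shorten("not a url", "tinyurl.localhost", memoryStore)); // null
```

Keeping the validation and code generation in small pure functions like these also makes the handler easier to unit test, since no HTTP request or database is needed.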

Connect To The Database

Next, add a new file functions/generate-tinyurl/db.js and paste the code below in it.

const faunadb = require("faunadb");

exports.q = faunadb.query;

exports.db = new faunadb.Client({
  secret: process.env.FAUNADB_SECRET,
  domain: process.env.FAUNADB_ENDPOINT,
  port: 443,
  scheme: "https",
});

This code connects to FaunaDB using the faunadb JS client. The secret and domain values are retrieved from environment variables. You can use an existing database or follow these steps to create a new Fauna database:

- Go to your Fauna dashboard and create a new database.

- Create a Collection named tinyurls.

- Click SECURITY in the left-side navigation menu and create a new key for your database. Be sure to save the key’s secret in a safe place, as it is only displayed once.

- Go to the tinyurls collection and create an index named urls_by_code with the terms set to code. This will allow you to query the DB using an index that checks the code property in the document.

Add Environment Variables

Create a new .env file in the generate-tinyurl directory. Here you will add the necessary environment variables. The values in this file are automatically loaded when you’re running locally (see the dev script in package.json), and are saved in your cluster (using ConfigMap objects) when you deploy.

Add the following key-value pairs to the .env file.

FAUNADB_SECRET=YOUR_SECRET_KEY
FAUNADB_ENDPOINT=db.fauna.com
BASE_URL=YOUR_API_DOMAIN

Replace YOUR_SECRET_KEY with the secret generated in the previous section. Change the FAUNADB_ENDPOINT value to reflect the region where the database was created: db.us.fauna.com for the US region or db.eu.fauna.com for the EU region.

The BASE_URL is the domain from which the service is accessible. This is the domain you’ll use when you configure an Ingress resource for your service. You can fill it out if you already have an idea, or update it after you’ve created the Ingress. For example, I’m using a local Kubernetes cluster and have set mine to BASE_URL=tinyurl.localhost.
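If you want to check a .env file before running or deploying, a small script can help. The sketch below is only an illustration of how key-value lines can be read and validated; it is not how kazi loads the file, and the `parseEnv` and `missingKeys` helpers are hypothetical names.

```javascript
// Parse KEY=value lines; blank lines and lines starting with # are skipped.
function parseEnv(text) {
  const vars = {};
  for (const line of text.split(/\r?\n/)) {
    const trimmed = line.trim();
    if (trimmed === "" || trimmed.startsWith("#")) continue;
    const eq = trimmed.indexOf("=");
    if (eq === -1) continue; // not a key-value pair
    vars[trimmed.slice(0, eq).trim()] = trimmed.slice(eq + 1).trim();
  }
  return vars;
}

// Hypothetical guard: report which of the required keys are missing or empty.
function missingKeys(vars, required) {
  return required.filter((key) => !vars[key]);
}

const sample = "FAUNADB_SECRET=YOUR_SECRET_KEY\nFAUNADB_ENDPOINT=db.fauna.com\n";
const parsed = parseEnv(sample);
console.log(missingKeys(parsed, ["FAUNADB_SECRET", "FAUNADB_ENDPOINT", "BASE_URL"]));
// [ 'BASE_URL' ]
```

Here the sample deliberately leaves out BASE_URL, so the guard reports it as missing.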

The resolve-tinyurl function

It’s time to create the second function, which will resolve the shortened URL and redirect the user to the original URL. Create the project using the command kazi create functions/resolve-tinyurl --registry=YOUR_REGISTRY_NAMESPACE --workspace-install. Replace YOUR_REGISTRY_NAMESPACE with your container registry endpoint.

Install the faunadb package using the command npm i faunadb -w resolve-tinyurl.

Copy the db.js and .env files from the other project to this one. You could put the db.js module in a separate project that both function projects use, but for the sake of this post, I’ll duplicate the code.

Open functions/resolve-tinyurl/index.js and update it with the code below.

const { send } = require("micro");
const { db, q } = require("./db");

module.exports = async (req, res) => {
  // "/abc123" -> "abc123"
  const code = req.url.substring(1);

  try {
    const {
      data: { url },
    } = await db.query(q.Get(q.Match(q.Index("urls_by_code"), code)));

    res.setHeader("Location", url);
    send(res, 301);
  } catch {
    send(res, 404, "No URL Found");
  }
};

The code above extracts the unique code from the URL and uses that to query the database. If there’s no result, we return a 404 status. Otherwise, the Location header is set and a 301 redirect status is returned.
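The lookup-and-redirect decision can also be written as a pure function, which is handy for testing without a database. This is a minimal sketch under that assumption: a `Map` replaces the Fauna index query, and the `resolve` helper is a hypothetical name, not part of the deployed function.

```javascript
// Pure version of the handler's logic: request path in, status and headers out.
function resolve(path, store) {
  const code = path.substring(1); // "/abc123" -> "abc123"
  const url = store.get(code);
  if (!url) return { status: 404, body: "No URL Found" };
  return { status: 301, headers: { Location: url } };
}

const knownUrls = new Map([["ppqFoY0rh6", "https://pmbanugo.me"]]);
console.log(resolve("/ppqFoY0rh6", knownUrls));
console.log(resolve("/missing", knownUrls));
```

The first call yields a 301 with the Location header set to the original URL, and the second yields the 404 result.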

Deploy The Functions

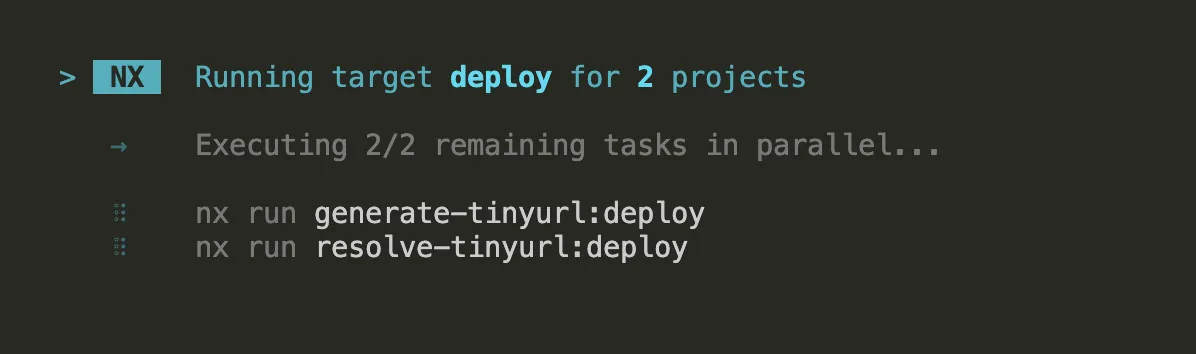

Now that the functions are ready, the next thing to do is deploy them. There’s a deploy script in each function’s package.json, which executes the kazi deploy command. Before you run this script, you will update the nx.json file so that the result of this script is cached by Nx. That way, running the deploy script multiple times without any file changes will be faster.
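To give an intuition for why a cached task can be skipped when nothing changed, here is a simplified sketch of caching keyed by a hash of the task’s inputs. It illustrates the general idea only and is not Nx’s actual implementation; `hashInputs` and `runCached` are hypothetical names.

```javascript
const { createHash } = require("node:crypto");

// Hash the inputs of a task; identical inputs give an identical key.
function hashInputs(files) {
  const hash = createHash("sha256");
  for (const name of Object.keys(files).sort()) {
    hash.update(name).update("\0").update(files[name]).update("\0");
  }
  return hash.digest("hex");
}

// Run `task` only when no result is stored for the current input hash.
function runCached(cache, files, task) {
  const key = hashInputs(files);
  if (cache.has(key)) return { result: cache.get(key), cached: true };
  const result = task();
  cache.set(key, result);
  return { result, cached: false };
}

const taskCache = new Map();
const sourceFiles = { "index.js": "module.exports = () => 'hi';" };
console.log(runCached(taskCache, sourceFiles, () => "deployed").cached); // false
console.log(runCached(taskCache, sourceFiles, () => "deployed").cached); // true
sourceFiles["index.js"] += "\n// edited";
console.log(runCached(taskCache, sourceFiles, () => "deployed").cached); // false
```

The second run is served from the cache because the inputs are unchanged, while editing a file produces a new hash and forces the task to run again.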

Go to the workspace root directory and open the nx.json file. Add deploy to the cacheableOperations array values.

"cacheableOperations": ["build", "lint", "test", "e2e", "deploy"]

Next, open the root package.json and add the script below:

"scripts": {
  "deploy": "nx run-many --target=deploy --all"
},

This command will execute the deploy command for every project. Now run npm run deploy in the workspace root to execute this script. This will execute both scripts in parallel just like you can see in the screenshot below.

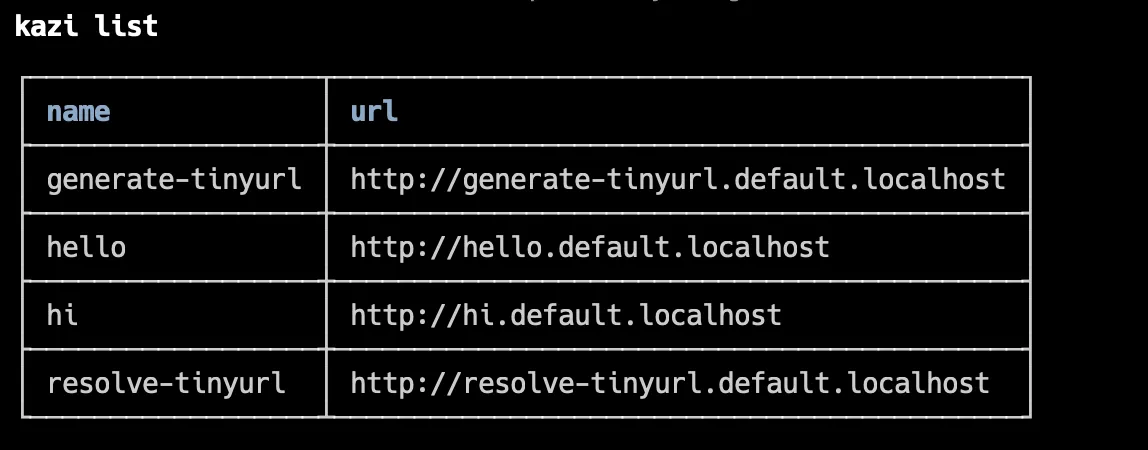

When it is done, you should see the message Successfully ran target deploy for 2 projects in the terminal. You can verify that the functions were deployed by running the command kazi list, which returns a list of functions deployed using the CLI.

In the screenshot above, you can see that the generate-tinyurl function is available at http://generate-tinyurl.default.localhost, and the resolve-tinyurl function at http://resolve-tinyurl.default.localhost (I’m running a local Kubernetes cluster ;) ).

It’s possible to create these functions as services that are accessible only within the cluster, and then expose them via an API Gateway.

One Endpoint To Rule Them All

While you can access these functions with their respective URL, the goal here is to have a single endpoint where a specific path or HTTP method will trigger a function. To achieve this, we’re going to create an Ingress resource that will route GET requests to resolve-tinyurl and POST requests to generate-tinyurl.

First, create a new file kong-plugin.yaml and paste the YAML below in it.

# Create a Kong request transformer plugin to rewrite the original host header
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: generate-tinyurl-host-rewrite
  # The plugin must be created in the same namespace as the ingress.
  namespace: kong
plugin: request-transformer
config:
  add:
    headers:
      - "Host: generate-tinyurl.default.svc.cluster.local"
  replace:
    headers:
      - "Host: generate-tinyurl.default.svc.cluster.local"
---
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: resolve-tinyurl-host-rewrite
  # The plugin must be created in the same namespace as the ingress.
  namespace: kong
plugin: request-transformer
config:
  add:
    headers:
      - "Host: resolve-tinyurl.default.svc.cluster.local"
  replace:
    headers:
      - "Host: resolve-tinyurl.default.svc.cluster.local"

The YAML above defines two Kong plugins that rewrite the Host header for incoming requests. This is how the Kong proxy knows which Knative service to proxy to.

Finally, create a new file ingress.yaml and paste the YAML below in it.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tinyurl-get
  # The ingress must be created in the same namespace as the kong-proxy service.
  namespace: kong
  annotations:
    kubernetes.io/ingress.class: kong
    konghq.com/methods: GET
    konghq.com/plugins: resolve-tinyurl-host-rewrite
spec:
  rules:
    - host: tinyurl.localhost
      http:
        paths:
          - pathType: ImplementationSpecific
            backend:
              service:
                name: kong-proxy
                port:
                  number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tinyurl-post
  namespace: kong
  annotations:
    kubernetes.io/ingress.class: kong
    konghq.com/methods: POST
    konghq.com/plugins: generate-tinyurl-host-rewrite
spec:
  rules:
    - host: tinyurl.localhost
      http:
        paths:
          - pathType: ImplementationSpecific
            backend:
              service:
                name: kong-proxy
                port:
                  number: 80

Here you defined two ingresses pointing to the same host, but using different plugins and methods. Replace tinyurl.localhost with tinyurl plus your Knative domain (e.g. tinyurl.dummy.com).
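The combined effect of the two ingresses and their plugins can be pictured as a match on host and method followed by a Host header rewrite. The sketch below models that in plain Node.js purely as an illustration; it is not how Kong is implemented, and the `ingressRules` table and `rewriteRequest` helper are hypothetical names.

```javascript
// Hypothetical model of the two Ingress rules and the Host each plugin writes.
const ingressRules = [
  {
    host: "tinyurl.localhost",
    method: "GET",
    upstreamHost: "resolve-tinyurl.default.svc.cluster.local",
  },
  {
    host: "tinyurl.localhost",
    method: "POST",
    upstreamHost: "generate-tinyurl.default.svc.cluster.local",
  },
];

// Match on host and method, then replace the Host header as the plugin would.
function rewriteRequest(request) {
  const rule = ingressRules.find(
    (r) => r.host === request.headers.host && r.method === request.method
  );
  if (!rule) return null; // no ingress matches this request
  return { ...request, headers: { ...request.headers, host: rule.upstreamHost } };
}

const incoming = { method: "POST", headers: { host: "tinyurl.localhost" } };
console.log(rewriteRequest(incoming).headers.host);
// generate-tinyurl.default.svc.cluster.local
```

A request with a method that neither rule covers, such as DELETE, matches nothing in this model and returns null.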

Now open the terminal and run kubectl apply -f kong-plugin.yaml -f ingress.yaml to apply these resources.

Now reach for your HTTP client and send a POST request. For example, the following command will send a POST request to the service at tinyurl.localhost:

curl -X POST -H "Content-Type: application/json" \
  -d '{"url": "https://pmbanugo.me"}' \
  http://tinyurl.localhost

The response will be something similar to the following.

{
  "shortUrl": "tinyurl.localhost/ppqFoY0rh6",
  "originalUrl": "https://pmbanugo.me"
}

Open the shortUrl in the browser and you should be redirected to https://pmbanugo.me.

Now you have a REST API where specific calls get routed to different functions that are scaled independently! How awesome can that be 🔥.

What Next?

In this post, I showed you how to build and deploy a REST API powered by serverless functions running on Kubernetes. Most of this was made possible using Knative, Kong API Gateway, and the kazi CLI. You maintained the monorepo using Nx, which is quite a handy tool for working with monorepos. I briefly talked about some of these tools but you can read more about them using the following links:

kazi features are still minimal at the moment but there will be more features added in the near future, with more in-depth documentation. I will be sharing more of it here as new features get added. You can follow me on Twitter or subscribe to my newsletter if you don’t want to miss these updates 😉.

You can find the complete source code for this example on GitHub